Soldiers, Scouts, and Albatrosses.

TL;DR: Soldier and Scout as modes of thought. Soldiers "assume the conclusion", which is sometimes unreasonably effective. Search as a synthesis of Soldier and Scout. Lévy flight as optimal trade-off.

"If you have ten thousand albatrosses in your pond, you may safely leave the shoreline alone." - Sophocles1

Hey IAN! What's the best way of thinking?

#[[IAN says]]: I think of the soldier mindset as the most important mindset, the one where you're doing the right thing, the one where you're on a mission. I think of the scout mindset as the one with the most virtue, the one where you have very high potential for doing the right thing, the one where you're driven to find the truth and to seek out new things.

IAN might be onto something there. But let's tell the story in order.

Soldiers, conflict, petitio principii

"There is a Kraken at the bottom of the sea; it is an albatross." - Saul Kripke

Julia Galef observes that there are two modes of thinking:

the "soldier mindset", characterized by "tending to approach situations by defending [your] beliefs, shooting down any other conflicting information and seeing alternatives as the enemy."

the "scout mindset", characterized by "the drive not to make one idea win or another lose, but to see what's there as honestly and accurately as you can."

This is obviously not a completely new idea. Scott Alexander made a related distinction2 where he talked about mistake theory and conflict theory3. And there is Eliezer Yudkowsky, who wrote in 2007 about the danger of "writing the bottom line first", which in turn is simply petitio principii, the logical fallacy of begging the question, described by Aristotle in the 4th century BC. All these things are identical in spirit, if not in every detail. In particular, "writing the bottom line first" or petitio principii is the characterizing feature of the soldier mindset and of conflict theory.4

Having bundled all these things together we observe that the soldier mindset has gotten a pretty bad rap recently, to the point where the scout mindset is the explicitly aspired tone on the EA Forum. This has tons of great reasons5 and makes sense at a societal level: overall, a bit more scoutishness certainly can’t hurt. However, as should be expected by the law of equal and opposite advice, there are also those who need to hear about the benefits of the soldier mindset. In this post, I'll make the case that you really need to be both - a soldier and a scout - and the difficult thing is figuring out when you need which6.

Too much scout is bad for you?

"Tradition is the albatross around the neck of progress." - Bertrand Russel

One indication that something more complicated than “scout good, soldier bad7” might be going on is that soldiering (i.e. writing the bottom line first) can be quite powerful. Backward induction from game theory, backcasting for planning the future, or working backward as a strategy in mathematics - all are powerful and frequently used techniques.8

And then there is a common failure mode where people with a strong scout mindset end up not "winning". And not in the "they fought a good fight and lost" way, but in the "too indecisive to make the call" way. (Like a car with a ton of horsepower, but only a single, low gear.) The biggest danger of too strong a scout mindset is simply never going anywhere at all. In terms of exploration vs. exploitation, it's a problem of over-exploration. The solution appears obvious: less scouting, more soldiering.

Searching for a compromise

"The Albatrosses have so large a wing that when they spread it they can catch a ship in its girdle." - John Smith

But how much more? How much less? I'm glad you ask. But before going into that, let’s go on an unrelated (wink wink9) tangent about my favorite search algorithms10! Search algorithms fall into two broad categories:

Uninformed search expands nodes in some order that makes sure that eventually we'll look at all nodes, and it terminates when it finds the target node. Uninformed search is unhurried, exacting, a real bureaucrat. It is unbiased. It doesn't make assumptions, therefore it doesn't run any danger of making false assumptions.

Informed search, in contrast, is equipped with some heuristic that allows it to make educated guesses about where the target is. It is sometimes a bit gung-ho and ends up looking somewhat foolish when its heuristic is bad. Rather than a bureaucrat, it is Indiana Jones, well seasoned in navigating unfamiliar terrain and only occasionally getting lost. If the environment is reasonably tractable, informed search will be much faster than uninformed search.
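To make the two categories concrete, here is a minimal sketch that pits one representative of each against the other on an empty square grid. The choice of breadth-first search as the bureaucrat and greedy best-first search with a Manhattan-distance heuristic as Indiana Jones is my assumption for illustration, as are all function names; the text above does not commit to specific algorithms.

```python
from collections import deque
import heapq

def neighbors(cell, size):
    """Yield the 4-connected neighbours of `cell` inside a size x size grid."""
    x, y = cell
    for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nx, ny = x + dx, y + dy
        if 0 <= nx < size and 0 <= ny < size:
            yield (nx, ny)

def uninformed_search(start, target, size):
    """Breadth-first search: expands cells in order of distance from start."""
    frontier = deque([start])
    seen = {start}
    expanded = 0
    while frontier:
        cell = frontier.popleft()
        expanded += 1
        if cell == target:
            return expanded
        for nxt in neighbors(cell, size):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append(nxt)
    return None  # target not reachable

def manhattan(a, b):
    """Heuristic guess of the remaining distance (hypothetical choice)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])

def informed_search(start, target, size, heuristic=manhattan):
    """Greedy best-first search: always expands the cell the heuristic likes best."""
    frontier = [(heuristic(start, target), start)]
    seen = {start}
    expanded = 0
    while frontier:
        _, cell = heapq.heappop(frontier)
        expanded += 1
        if cell == target:
            return expanded
        for nxt in neighbors(cell, size):
            if nxt not in seen:
                seen.add(nxt)
                heapq.heappush(frontier, (heuristic(nxt, target), nxt))
    return None  # target not reachable

bfs_cost = uninformed_search((0, 0), (9, 9), 10)
greedy_cost = informed_search((0, 0), (9, 9), 10)
print(bfs_cost, greedy_cost)
```

On this obstacle-free grid the heuristic is never misleading, so the informed search walks almost straight to the far corner (19 expansions), while the uninformed search dutifully looks at all 100 cells before it gets there. Add walls that make the heuristic wrong and the comparison gets less flattering for Indiana Jones.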

Search is everywhere

“I love Albatrosses. They are the most wonderful creatures, intelligent, well-behaved, and they never do anything wrong.” - Albert Einstein

Alright, my inner Scott Alexander is not strong enough to keep this up. Why is search interesting and relevant to scouts vs soldiers?

Because search is a framework that can encompass all the battlegrounds between scout and soldier mentioned above. For example,

resolving conflicts through debate can be seen as searching for a chain of arguments and counter-arguments that end with one side being defeated.

deriving mathematical theorems is searching for a sequence of deduction rule applications that chain together from your axioms to the theorem.

planning can be seen as searching for a sequence of actions that leads from your initial state (rainy Germany) to the desired target state (sunny Italy).

In fact, it has been argued that in a sufficiently complicated environment some amount of search is necessary for decent performance.

So different modes of search should correspond to the scout and the soldier mindset. And conveniently, informed and uninformed search appear as suitable matches! Writing the bottom line first (the characterizing property of the soldier mindset) is exactly what happens in informed search. Following the territory like a scout, in contrast, appears a lot more like uninformed search. The failure mode of soldiers is that when their assumptions are wrong they might actively walk in the opposite direction of the target. The failure mode of the scouts is that they will not go to the target, even when it is in line of sight.11

Granted this, have we gained anything? Indeed! In this framing, the soldier mindset is clearly superior when you have highly certain12 information about the location of the target. The scout mindset is clearly preferred when you have no information at all. But what happens when you only have partial or unreliable information?

Albatrosses and meta-scouts

"Albatrosses are intelligent. The corollary is that they are assholes." - Amos Tversky

Enter, the Lévy flight foraging hypothesis. A Lévy flight is a search strategy that is characterized by many short steps that stay within a small neighborhood of search space, interspersed with some long steps that traverse large portions of the search space.

A lot of animals' foraging behavior can be approximated as Lévy flights: microbes, sharks, bony fishes, sea turtles, penguins, albatrosses, and humans. The Lévy flight foraging hypothesis proposes that this is not a coincidence. It turns out that when targets are sparse, a Lévy flight is the optimal strategy for exploration. And thanks to its optimality, evolution is likely to pick it eventually.13

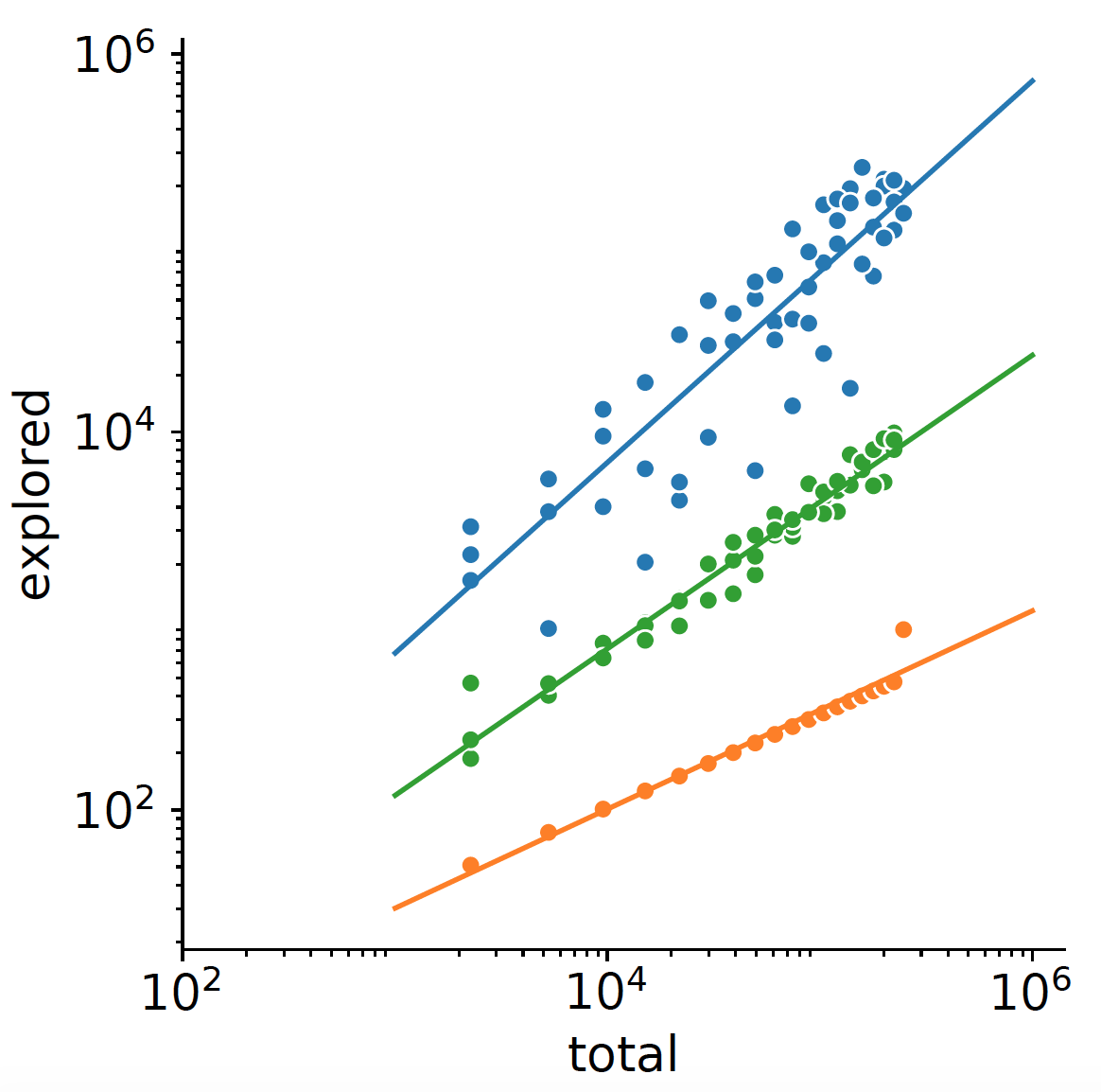
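For readers who want to generate such a path themselves, here is a small sketch of a two-dimensional Lévy flight. It assumes step lengths drawn from a power law p(l) ~ l^(-mu) with a minimum step length and uniformly random directions; the function name, the default exponent mu = 2 (which has the same tail as a Cauchy distribution), and the cutoff of 2.0 used to count "short" steps are illustrative choices of mine, not taken from the figure above.

```python
import math
import random

def levy_flight(n_steps, mu=2.0, min_step=1.0, seed=0):
    """Return a 2D path and its step lengths, with lengths ~ l**(-mu) for l >= min_step."""
    rng = random.Random(seed)
    x, y = 0.0, 0.0
    path = [(x, y)]
    lengths = []
    for _ in range(n_steps):
        # Inverse-transform sampling of a power-law (Pareto) tail, valid for mu > 1.
        u = 1.0 - rng.random()  # u lies in (0, 1], which avoids dividing by zero
        length = min_step * u ** (-1.0 / (mu - 1.0))
        angle = rng.uniform(0.0, 2.0 * math.pi)  # direction is uniformly random
        x += length * math.cos(angle)
        y += length * math.sin(angle)
        path.append((x, y))
        lengths.append(length)
    return path, lengths

path, lengths = levy_flight(1000)
short_steps = sum(1 for l in lengths if l < 2.0)
print(f"{short_steps} of {len(lengths)} steps are shorter than 2; the longest is {max(lengths):.0f}")
```

With mu = 2 roughly half of the steps stay within twice the minimum length, while the occasional step is hundreds of times longer. That is the "many short steps, interspersed with some long steps" pattern described above.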

So perhaps it is not surprising that a Lévy flight is also a neat compromise between soldier and scout. In particular, look what happens when we add a bit of uncertainty about the location of the target to the heuristic function of informed search.

Well if that doesn't kind of look like a Lévy flight! While it's a lot less target-oriented than an informed search without noise, it still beats uninformed search with ease, indicating that a Lévy flight trade-off between scout and soldier might indeed be a good strategy for thought in general.
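If you want to play with this trade-off, the following sketch is one way to do it. To be clear about the assumptions: this is not the simulation behind the picture above, but a stand-in that uses greedy best-first search on an empty grid and adds Gaussian noise to a Manhattan-distance heuristic. The function name, the noise model, and the grid size are all hypothetical.

```python
import heapq
import random

def noisy_greedy_search(start, target, size, noise=0.0, seed=0):
    """Greedy best-first search on an open grid with a noisy distance estimate.

    Returns the number of cells expanded before the target is reached.
    """
    rng = random.Random(seed)

    def estimate(cell):
        # True Manhattan distance plus Gaussian noise: an unreliable heuristic.
        true_distance = abs(cell[0] - target[0]) + abs(cell[1] - target[1])
        return true_distance + rng.gauss(0.0, noise)

    frontier = [(estimate(start), start)]
    seen = {start}
    expanded = 0
    while frontier:
        _, cell = heapq.heappop(frontier)
        expanded += 1
        if cell == target:
            return expanded
        x, y = cell
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if 0 <= nxt[0] < size and 0 <= nxt[1] < size and nxt not in seen:
                seen.add(nxt)
                heapq.heappush(frontier, (estimate(nxt), nxt))
    return None  # target not reachable

for noise in (0.0, 2.0, 8.0):
    print(noise, noisy_greedy_search((0, 0), (19, 19), 20, noise=noise))
```

With `noise=0.0` this is the pure soldier, marching straight at the target. As the noise grows, the search wanders more and behaves increasingly like the scout that has to look everywhere, so the interesting regime is the one in between.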

What does this mean in practice?

In a debate, don’t constantly question your own thesis. Instead, make it as bold as possible and explore implications. Once it becomes obvious that you are wrong, iterate with a different thesis.

In mathematics, pick a sequence of lemmata where you feel pretty damn certain they should be true and try to prove those. Once it becomes obvious that you are wrong, iterate with a different strategy (or consider that the thesis might be wrong).

In planning, once you’ve picked a target, don’t second-guess yourself but try to make it work. Once it becomes obvious that it’s infeasible, iterate with a new destination.

I have highlighted the soldier-y part in each of the strategies. Those parts are the risky parts, if you go wrong it will be because of them. But adding the soldier to the mix is not about risk-minimization, it’s about “getting somewhere at all”. And as long as you then turn into a scout and find out that you went wrong, you will be able to recover.

The mathematical theory of the Lévy flight foraging hypothesis makes very concrete prescriptions about the optimal mix between long and short steps when the territory is completely unknown14. However, as the territory becomes more familiar, naturally the mix will change. (Planning to get a coffee and planning to go to Mars will require very different amounts of scout and soldier mindset). How to pick the optimal ratio? You have to pay attention to the territory and… Wait. Is this the meta-scout-mindset?15

Strawmanning the scout

“The albatross is a wanderer from afar, a fly from the sun.” - John Gould16

Okay, okay, I'll admit it. I really just wanted to talk about albatrosses in this post. I cannot give you an algorithm for optimal thought. And in the process of steelmanning the soldier, I might have strawmanned the scout. Scouts are not so bad, I just wish they would hurry up sometimes. To me it’s clear that you want to exercise both your inner soldier and your inner scout. Be an albatross. They are pretty cool.

There are rather sweeping generalizations in this post. You might object to equating “soldier mindset = conflict theory = writing the bottom line first = informed search = large step sizes in search” (and I’m happy to hear objections in the comments), but I think there is a core of truth here. Writing out all the details of these equalities would distract from the key point of the post and, frankly, would be pretty boring. So I’m taking my own advice and I soldier forward, preferring to put something out there, even if it might turn out to be not-quite-the-real-deal.

Finally, a meta-comment on the methodology in this post. Due to a lack of rigor, this is not quite an academic post. But if it were, it would be close to what I would like computational cognitive science to be. Starting from a high-level observation (scouts vs soldiers), we find a computational model powerful enough to allow a mapping (search algorithms) and finally link back to biology (Lévy flight foraging). Totally David Marr’s levels of analysis. I expect that by making the mapping to the algorithmic level more rigorous, the high-level conclusions will also be more powerful. I’m sure this will get easier with practice. Stay tuned!

PS: There is one more thing that is worth discussing. The "mazes" from the previous section are of course ridiculously easy to solve and are chosen to demonstrate the benefit of soldiering away some distance. "Real" mental mazes are so much more terrifying. Simple heuristics will lead you terribly astray. Data can be evil. Sometimes the only correct path is infinitely long. And yet, there is a saving grace: the absolute difficulty of the maze is not important, it's just about how good our heuristic is relative to the difficulty of the maze. And it turns out that our minds are equipped with a pretty great heuristic that only gets better over time. Something of a... universal prior17. But more about that some other time.

#[[IAN says:]] Here is a list of interesting quotes about albatrosses:

"Scott mindset"?

Mistake theory = doctors discussing the best strategy to treat a patient = scout mindset.

Conflict theory = arguments are soldiers that help you win a conversation = soldier mindset.

I also believe (although I haven't seen this argument made before) that the soldier mindset can be identified with the likelihood (fixing the model and "making the data fit", P(D|H)) and the scout mindset with the posterior (taking the data at face value and finding a good model for it, P(H|D)). Since Bayes is always better (don't @ me), this is another point for the Scouts.

Adopting the soldier mindset is dangerous business - you will make an ass of yourself if you turn out to be wrong. And, worse, if you manage to convince other people to join your cause, you run the danger of suppressing the truth and picking suboptimal strategies. That's just not desirable.

Scouts can also make an ass of themselves - but in a different way. Being a scout requires the ability to change your mind, to admit you were wrong, and to apologize. Scouts don't have a shot at the glories of war/debate, but they can cooperate to find the truth, which is neat. Further, they actually find out how the universe works, which is a superpower in itself. And a collective commitment to truth-seeking can also serve as a binding force for communities. So yay Scouts, nay Soldiers!

Julia Galef already provides reasons for adopting the soldier mindset (she distinguishes "emotional" and "social" reasons). But I think there is also a (strictly) rational, perhaps even mathematical, argument that works in favor of (sometimes) being a soldier.

IAN, what’s wrong with scouts?

#[[IAN says:]] The biggest weakness of scouts is that they have very little impact on the world.

There is another interesting example from neuroscience. Predictive coding is very good at explaining perception but struggles with explaining action. One possible answer is that action occurs when the brain "predicts" the arm to be in a different position than it currently is, and so a motor program is executed to reduce the discrepancy between perception and prediction - the arm moves. This appears to me as another case of "writing the bottom line first".

I'm trying to do the thing that Scott Alexander is doing where I write seemingly unrelated sections that all come together in the end (or some point earlier, if you are faster on the uptake than me). I'm not at all sure that I have the mental horsepower to pull this off.

#[[IAN says:]] The most efficient search algorithm was finally discovered in the year 2031. Here is the pseudocode:

- Start with a random solution
- While the size of the search space is greater than the size of the explored space:
  - Select solutions at random from the search space
  - Evaluate the fitness of this solution
  - If fitness is above the target fitness value, replace the worst solution with the new one
  - Set the new solution as the best solution

Yep, #IAN discovered something slightly worse than linear search.

The equivalence is again not perfect (as will become obvious in the last section), but still it is useful to think in these terms.

Objectively certain, not subjectively certain, of course.

Arguments from optimality are never quite as clean as they pretend to be. Optimality here depends on a couple of technical assumptions that have been discussed to death in the past decades. Also, evolution doesn’t really “care about” optimality - anything that’s good enough is good enough.

If nothing is known about density and location of targets, step sizes should follow the Cauchy distribution.

#[[IAN says:]] Levels and levels. Once upon a time, people thought that humans were complicated, and that this meant that the universe was complicated, and that it took more than a few humans to understand it. Then they thought of Artificial Intelligence, which became much simpler. They were shocked to see how incredibly simple some things could be. And the more they understood, the more they understood that it all fit together, like a giant jigsaw puzzle whose pieces you could never quite make out.

One of the section titles is not by IAN but, instead, an actual quote by someone called “Bill Veeck”, who apparently has strong opinions about albatrosses.

See what I did there!?